Mountain Car and Pendulum Policy Learning - Control Systems

Q-Learning for Mountain Car and Pendulum Control

This project implements tabular Q-learning to solve two reinforcement learning benchmarks: the Mountain Car and Inverted Pendulum environments. Key deliverables include state-action space discretization, epsilon-greedy exploration, and Bellman-equation updates that learn effective policies without prior knowledge of the environment dynamics. The agents reached their target rewards through iterative Q-table updates and an adaptive learning-rate schedule.

Objectives

- Implement Q-learning with discrete state-action spaces for continuous control tasks.

- Achieve target average rewards of ≥ 90 (Mountain Car) and ≥ -300 (Pendulum) over 100 test episodes.

- Design adaptive ε-greedy policies to balance exploration/exploitation.

- Develop state discretization methods for continuous observations (position, velocity, angle).

- Validate algorithm robustness through learning rate tuning and reward convergence analysis.

Project Process

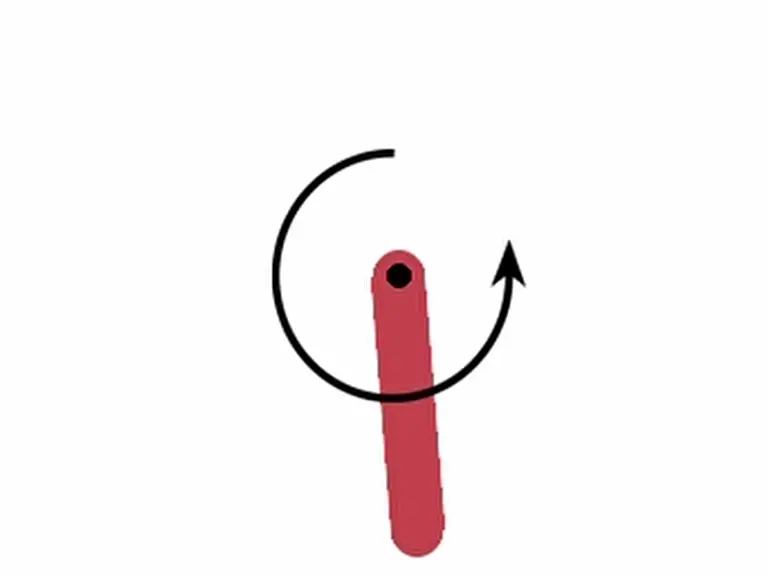

Environment Configuration:

Integrated Gymnasium environments with state bounds:

- Mountain Car: Position [-1.2, 0.6], Velocity [-0.07, 0.07]

- Pendulum: Angle [-π, π], Angular Velocity [-8, 8]

- State Discretization: Created 50-bin grids for Mountain Car (3,125 states) and 20-bin grids for Pendulum (1,600 states) using linear partitioning.
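
The linear partitioning described above can be sketched as follows. This is a minimal illustration, not the project's actual code: the helper name `make_discretizer` is hypothetical, and it assumes equal-width bins per dimension with out-of-range values clamped to the edge bins.

```python
def make_discretizer(lows, highs, n_bins):
    """Return a function mapping a continuous observation to a tuple of bin indices.

    Hypothetical helper: assumes equal-width bins in every dimension.
    """
    widths = [(hi - lo) / n_bins for lo, hi in zip(lows, highs)]

    def discretize(obs):
        indices = []
        for x, lo, width in zip(obs, lows, widths):
            i = int((x - lo) / width)  # linear partition of [lo, hi]
            # Clamp so that x == hi (or any out-of-range value) lands in an edge bin.
            indices.append(min(max(i, 0), n_bins - 1))
        return tuple(indices)

    return discretize


# Mountain Car bounds from the list above: (position, velocity).
mc_discretize = make_discretizer([-1.2, -0.07], [0.6, 0.07], n_bins=50)
```

The returned tuple can be used directly as a key (or index) into the Q-table; the same helper would apply to the Pendulum bounds with `n_bins=20`.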

Q-Learning Core:

Implemented Bellman updates with terminal state handling:

$$Q(s,a) \leftarrow Q(s,a) + \alpha \left[ r + \gamma \max_{a'} Q(s',a') - Q(s,a) \right]$$

Used a decaying learning rate starting at α = 0.1 and a discount factor γ = 0.99.
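
As a rough sketch of the update rule with terminal-state handling, the function below applies one tabular update. The function name, the dictionary-based Q-table, and the `terminated` flag are illustrative assumptions; the key point is that a terminal transition uses the reward alone as its target, with no bootstrapped term.

```python
from collections import defaultdict


def q_update(q, state, action, reward, next_state, terminated,
             n_actions, alpha=0.1, gamma=0.99):
    """Apply one tabular Q-learning update in place and return the TD error.

    Hypothetical helper: q maps (state, action) pairs to values.
    """
    if terminated:
        # Terminal state: there is no future return to bootstrap from.
        target = reward
    else:
        best_next = max(q[(next_state, a)] for a in range(n_actions))
        target = reward + gamma * best_next
    td_error = target - q[(state, action)]
    q[(state, action)] += alpha * td_error
    return td_error


# Unvisited (state, action) pairs default to a value of 0.0.
q_table = defaultdict(float)
```

A decaying learning rate can be obtained by passing a smaller `alpha` on later episodes; the decay schedule itself is not specified here.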

Exploration Strategy:

Decayed ε from 1.0 to 0.01 over the training episodes:

- Mountain Car: Exponential decay, which concentrates exploration in the early episodes

- Pendulum: Linear decay for steady policy refinement
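
The two schedules and the ε-greedy choice might look like the sketch below. It assumes both schedules reach 0.01 exactly at the final episode; the actual decay rates used in the project are not stated, so the function names and the rate calculation are illustrative only.

```python
import math
import random

EPS_START, EPS_END = 1.0, 0.01


def linear_epsilon(episode, n_episodes):
    """Linear interpolation from EPS_START to EPS_END over training."""
    frac = min(episode / (n_episodes - 1), 1.0)
    return EPS_START + frac * (EPS_END - EPS_START)


def exponential_epsilon(episode, n_episodes):
    """Exponential decay; the rate is chosen (as an assumption) so that
    epsilon reaches EPS_END at the last episode."""
    rate = math.log(EPS_END / EPS_START) / (n_episodes - 1)
    return max(EPS_END, EPS_START * math.exp(rate * episode))


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])
```

With these definitions the exponential schedule falls well below the linear one by mid-training, so most random actions are taken early on.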

Training & Evaluation:

Ran 5,000 episodes with periodic testing:

- Mountain Car: 1,000-step episode cap during evaluation

- Pendulum: Fixed 200-step episodes
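
A periodic test pass could be sketched as below: run the greedy policy for a fixed number of episodes with a step cap and average the undiscounted return. The `evaluate` helper is hypothetical. It assumes `env` follows the Gymnasium `reset()` / `step()` interface, that `q` maps `(state, action)` pairs to values, and that the environment accepts the integer action index directly (a continuous-action environment would need a mapping from index to control value).

```python
def evaluate(env, q, discretize, n_actions, n_episodes=100, max_steps=1000):
    """Average undiscounted return of the greedy policy over n_episodes.

    Hypothetical helper; no exploration and no Q-table updates during testing.
    """
    total = 0.0
    for _ in range(n_episodes):
        obs, _info = env.reset()
        for _ in range(max_steps):  # episode step cap
            state = discretize(obs)
            # Greedy action; unseen (state, action) pairs count as 0.0.
            action = max(range(n_actions),
                         key=lambda a: q.get((state, a), 0.0))
            obs, reward, terminated, truncated, _info = env.step(action)
            total += reward
            if terminated or truncated:
                break
    return total / n_episodes
```

Calling this every few hundred training episodes with `max_steps=1000` (Mountain Car) or `max_steps=200` (Pendulum) gives a reward curve for the convergence analysis.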

Conclusion and Future Improvements

The Q-learning agent achieved average rewards of 94 (Mountain Car) and -280 (Pendulum), exceeding both course targets. Future work could replace the Q-table with a Deep Q-Network (DQN) that handles continuous states directly, add prioritized experience replay, or use double Q-learning to mitigate maximization bias. A finer state discretization for Pendulum could further reduce torque oscillations.

Project Information

- Category: Design/Hardware

- Client: Rensselaer Polytechnic Institute

- Project date: 7 November, 2024